New Tech May Make Prosthetic Hands Easier for Patients to Use

Researchers have developed new technology for decoding neuromuscular signals to control powered prosthetic wrists and hands. The work relies on computer models that closely mimic the behavior of the natural structures in the forearm, wrist and hand. The technology could also be used to develop new computer interface devices for applications such as gaming and computer-aided design (CAD).

The technology has worked well in early testing but has not yet entered clinical trials – making it years away from commercial availability. The work was led by researchers in the joint biomedical engineering program at North Carolina State University and the University of North Carolina at Chapel Hill.

Current state-of-the-art prosthetics rely on machine learning to create a “pattern recognition” approach to prosthesis control. This approach requires users to “teach” the device to recognize specific patterns of muscle activity and translate them into commands – such as opening or closing a prosthetic hand.

“Pattern recognition control requires patients to go through a lengthy process of training their prosthesis,” says He (Helen) Huang, a professor in the joint biomedical engineering program at North Carolina State University and the University of North Carolina at Chapel Hill. “This process can be both tedious and time-consuming.

“We wanted to focus on what we already know about the human body,” says Huang, who is senior author of a paper on the work. “This is not only more intuitive for users, it is also more reliable and practical.

“That’s because every time you change your posture, your neuromuscular signals for generating the same hand/wrist motion change. So relying solely on machine learning means teaching the device to do the same thing multiple times: once for each different posture, once for when you are sweaty versus when you are not, and so on. Our approach bypasses most of that.”

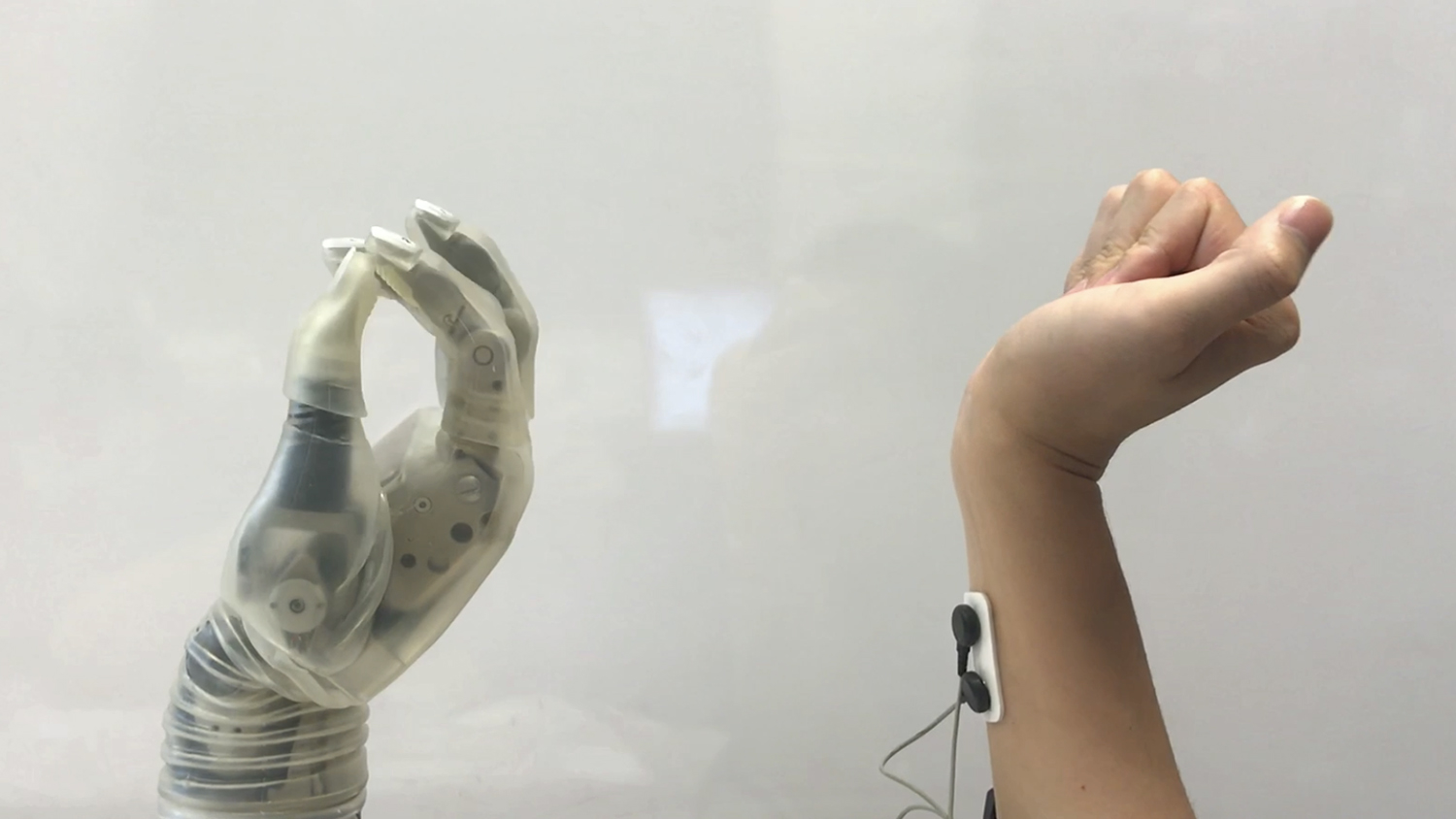

Instead of relying on pattern recognition, the researchers developed a user-generic musculoskeletal model. They placed electromyography sensors on the forearms of six able-bodied volunteers, tracking exactly which neuromuscular signals were sent when the volunteers performed various actions with their wrists and hands. This data was then used to create the generic model, which translates those neuromuscular signals into commands that manipulate a powered prosthetic.

“When someone loses a hand, their brain is networked as if the hand is still there,” Huang says. “So, if someone wants to pick up a glass of water, the brain still sends those signals to the forearm. We use sensors to pick up those signals and then convey that data to a computer, where it is fed into a virtual musculoskeletal model. The model takes the place of the muscles, joints and bones, calculating the movements that would take place if the hand and wrist were still whole. It then conveys that data to the prosthetic wrist and hand, which perform the relevant movements in a coordinated way and in real time – more closely resembling fluid, natural motion.

“By incorporating our knowledge of the biological processes behind generating movement, we were able to produce a novel neural interface for prosthetics that is generic to multiple users, including an amputee in this study, and is reliable across different arm postures,” Huang says.
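The signal path Huang describes (forearm sensors, a virtual musculoskeletal model, then commands to the prosthesis) can be illustrated with a deliberately simplified sketch. This is not the researchers' model: the `ToyJoint` class, its gain and angle limits, and the `decode` function are hypothetical stand-ins, chosen only to show how paired flexor and extensor activations could drive wrist and finger (MCP) angles simultaneously rather than one motion class at a time.

```python
from dataclasses import dataclass


@dataclass
class ToyJoint:
    """One joint driven by a flexor/extensor pair (hypothetical toy model)."""
    angle_deg: float = 0.0
    min_deg: float = -60.0          # assumed range of motion
    max_deg: float = 60.0
    gain_deg_per_s: float = 120.0   # assumed speed at full net activation

    def step(self, flexor_act: float, extensor_act: float, dt: float) -> float:
        # Net drive is the difference between the two opposing muscles.
        net = flexor_act - extensor_act
        self.angle_deg += self.gain_deg_per_s * net * dt
        # Keep the joint inside its assumed range of motion.
        self.angle_deg = max(self.min_deg, min(self.max_deg, self.angle_deg))
        return self.angle_deg


def decode(samples, dt=0.01):
    """Map normalized activations (0..1) to simultaneous wrist and MCP angles.

    Each sample is (wrist_flexor, wrist_extensor, finger_flexor, finger_extensor).
    """
    wrist = ToyJoint()
    mcp = ToyJoint(min_deg=0.0, max_deg=90.0)
    trajectory = []
    for wf, we, ff, fe in samples:
        trajectory.append((wrist.step(wf, we, dt), mcp.step(ff, fe, dt)))
    return trajectory
```

Calling `decode` with a stream of activation samples returns one `(wrist_angle, mcp_angle)` pair per sample, which is the kind of continuous, coordinated output a prosthetic controller would consume. A real musculoskeletal model would include muscle and joint dynamics that this sketch omits.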

And the researchers think the potential applications are not limited to prosthetic devices.

“This could be used to develop computer-interface devices for able-bodied people as well,” Huang says. “Such as devices for gameplay or for manipulating objects in CAD programs.”

In preliminary testing, both able-bodied and amputee volunteers were able to use the model-controlled interface to perform all of the required hand and wrist motions – despite having very little training.

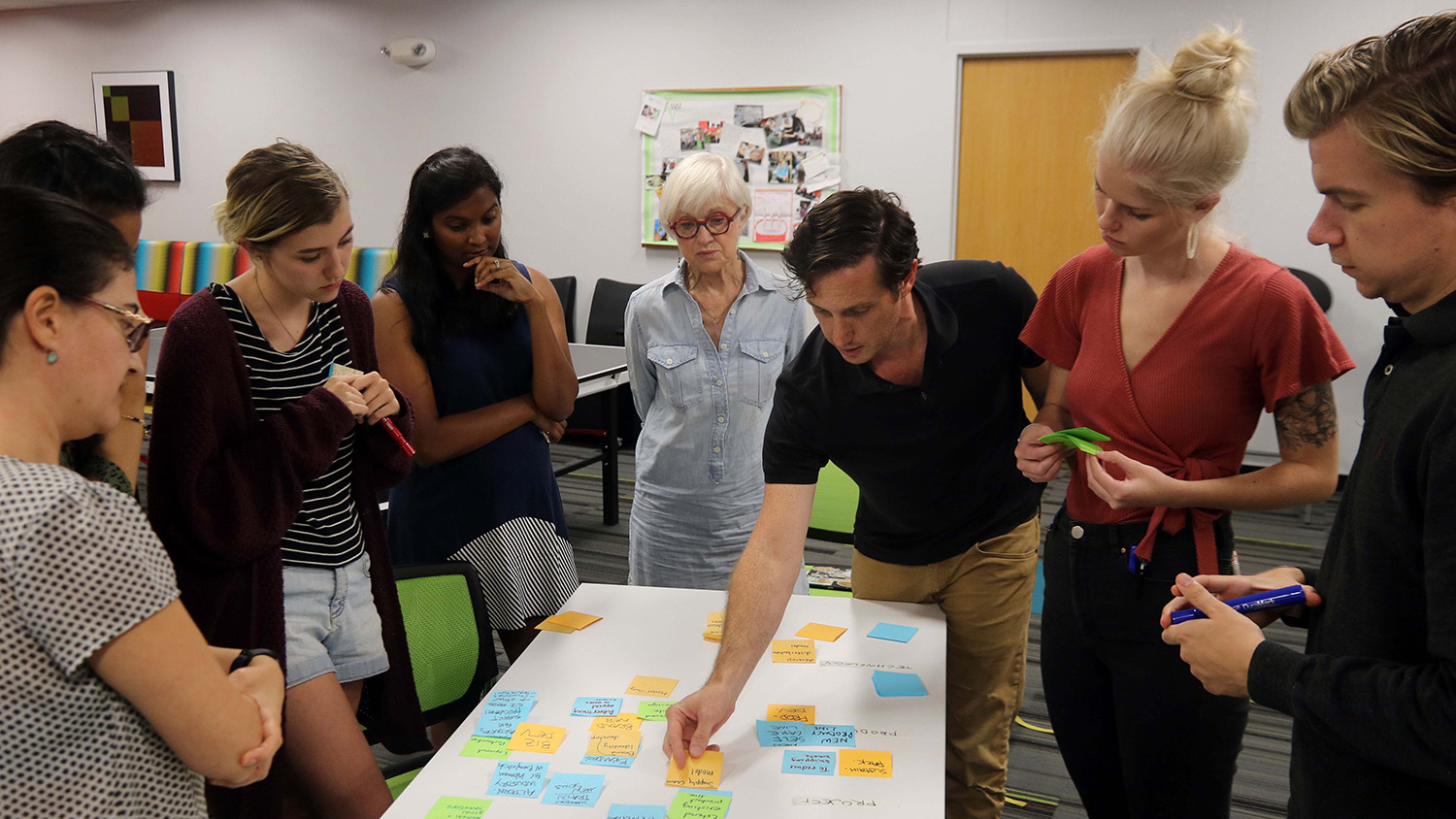

“We’re currently seeking volunteers who have transradial amputations to help us with further testing of the model to perform activities of daily living,” Huang says. “We want to get additional feedback from users before moving ahead with clinical trials.

“To be clear, we are still years away from having this become commercially available for clinical use,” Huang stresses. “And it is difficult to predict potential cost, since our work is focused on the software, and the bulk of cost for amputees would be in the hardware that actually runs the program. However, the model is compatible with available prosthetic devices.”

The researchers are also exploring the idea of incorporating machine learning into the generic musculoskeletal model.

“Our model makes prosthetic use more intuitive and reliable, but machine learning could allow users to gain more nuanced control by allowing the program to learn each person’s daily needs and preferences and better adapt to a specific user in the long term,” Huang says.

The paper, “Myoelectric Control Based on A Generic Musculoskeletal Model: Towards A Multi-User Neural-Machine Interface,” is published in the journal IEEE Transactions on Neural Systems and Rehabilitation Engineering. Lead author of the paper is Lizhi Pan, a postdoctoral researcher in Huang’s lab. The paper was co-authored by Dustin Crouch, a former postdoctoral researcher in Huang’s lab who is now at the University of Tennessee. The work was done with support from the Defense Advanced Research Projects Agency under grant N66001-16-2-4052; the National Science Foundation under grants 1527202 and 1637892; the National Institute on Disability, Independent Living, and Rehabilitation Research under grant 90IF0064; and the Department of Defense under grants W81XWH-15-C-0125 and W81XWH-15-1-0407.

-shipman-

Note to Editors: The study abstract follows.

“Myoelectric Control Based on A Generic Musculoskeletal Model: Towards A Multi-User Neural-Machine Interface”

Authors: Lizhi Pan, Dustin L. Crouch, and He Huang, North Carolina State University and University of North Carolina at Chapel Hill; Dustin L. Crouch is currently with the University of Tennessee, Knoxville

Published: May 18, IEEE Transactions on Neural Systems and Rehabilitation Engineering

DOI: 10.1109/TNSRE.2018.2838448

Abstract: This study aimed to develop a novel electromyography (EMG)-based neural-machine interface (NMI) that is user-generic for continuously predicting coordinated motion between metacarpophalangeal (MCP) and wrist flexion/extension. The NMI requires a minimum calibration procedure that only involves capturing maximal voluntary muscle contraction for the monitored muscles for individual users. At the center of the NMI is a user-generic musculoskeletal model based on the experimental data collected from 6 able-bodied (AB) subjects and 9 different upper limb postures. The generic model was evaluated on-line on both AB subjects and a transradial amputee. The subjects were instructed to perform a virtual hand/wrist posture matching task with different upper limb postures. The on-line performance of the generic model was also compared with that of the musculoskeletal model customized to each individual user (called “specific model”). All subjects accomplished the assigned virtual tasks while using the user-generic NMI, although the AB subjects produced better performance than the amputee subject. Interestingly, compared to the specific model, the generic model produced comparable completion time, a reduced number of overshoots, and improved path efficiency in the virtual hand/wrist posture matching task. The results suggested that it is possible to design an EMG-driven NMI based on a musculoskeletal model that could fit multiple users, including upper limb amputees, for predicting coordinated MCP and wrist motion. The present new method might address the challenges of existing advanced EMG-based NMI that require frequent and lengthy customization and calibration. Our future research will focus on evaluating the developed NMI for powered prosthetic arms.
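The calibration step mentioned in the abstract, capturing maximal voluntary contraction (MVC) for each monitored muscle, is commonly used to put EMG amplitudes from different users on a shared 0-to-1 scale. The sketch below shows that general idea only; the function name `normalize_to_mvc` and the clipping behavior are assumptions for illustration and are not taken from the paper.

```python
def normalize_to_mvc(envelope, mvc):
    """Scale an EMG envelope by a user's MVC amplitude and clip to [0, 1].

    envelope: non-negative EMG amplitude values for one muscle
    mvc: amplitude recorded during that muscle's maximal voluntary contraction
    """
    if mvc <= 0:
        raise ValueError("MVC amplitude must be positive")
    return [min(max(x / mvc, 0.0), 1.0) for x in envelope]
```

For example, with an MVC amplitude of 2.0, a reading of 0.5 maps to an activation of 0.25, and any reading above 2.0 is clipped to 1.0.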

This post was originally published in NC State News.
